We used ChatGPT and DALL·E to turn human sentiment into a digital work of art with Microsoft Research.

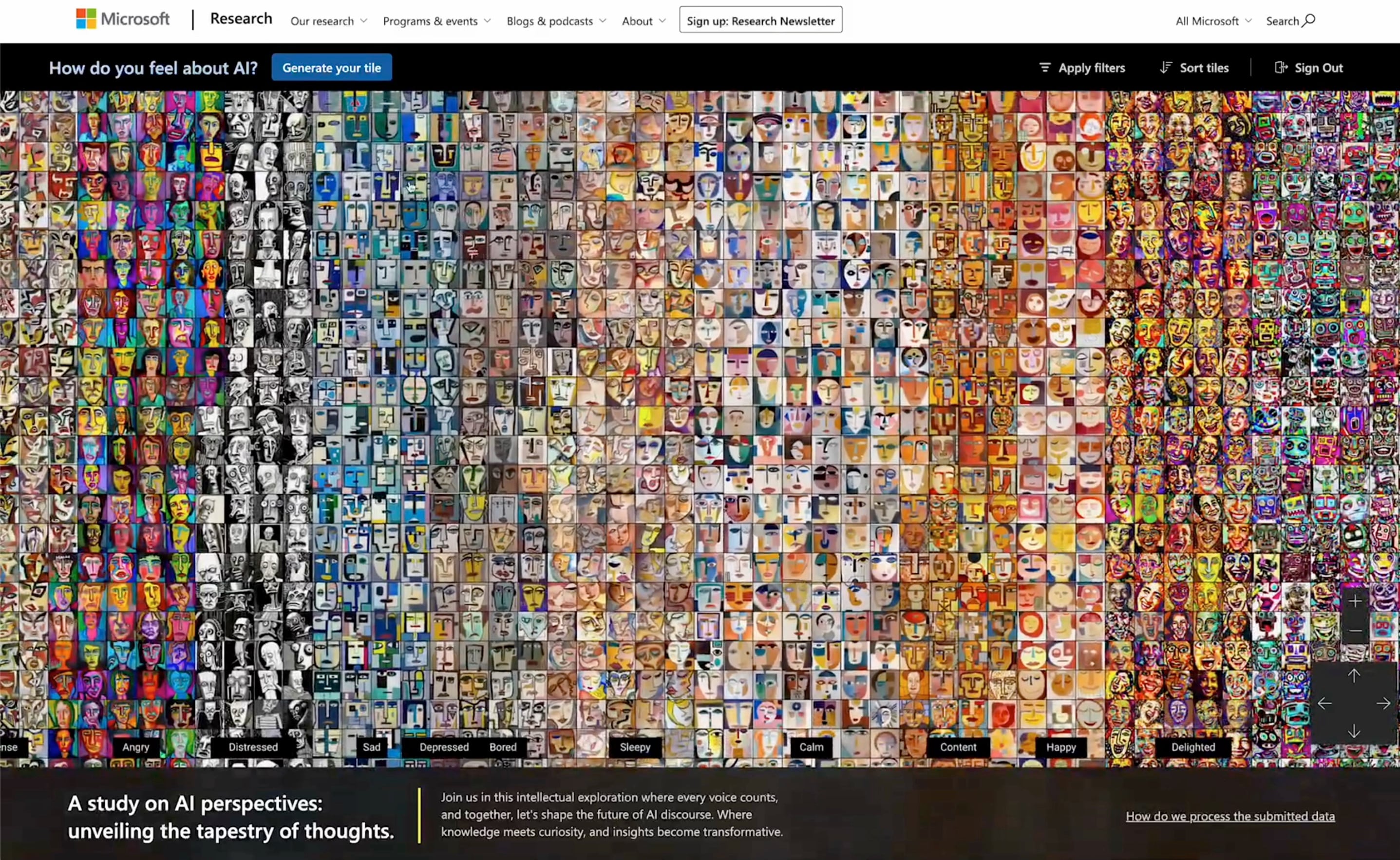

In mid-2024, Microsoft Research launched Mosaic: an interactive, AI-powered experience that visualized public sentiment about artificial intelligence. The internal experiment asked a deceptively simple question: “How do you feel about AI?” Using OpenAI’s models, it translated each participant’s answer into a stylized portrait, creating a living mural of collective emotion.

But Mosaic wasn’t a traditional software product; it was art, research, and experiment in equal parts. The vision originated with Asta Roseway, a principal researcher known for blending science and creativity inside Microsoft. She imagined an expressive, data-driven experience that could help people reflect on how AI made them feel. Fueled was brought in to make that vision real.

As a long-time Microsoft partner with deep experience shipping production-grade applications, we were trusted to translate a bold conceptual idea into a scalable, emotionally resonant digital experience.

From Vision to Interaction

The challenge wasn’t just to build a site, but to create something that felt like art. Asta had a clear conceptual direction, but questions remained: How could the experience feel cinematic? How could emotion become navigable? How could abstract data create a sense of connection?

Fueled worked hand-in-hand with the Microsoft Research team to co-evolve the idea. Together, we framed Mosaic not as a dashboard or gallery, but as a unified, immersive canvas—a place where each visitor’s feelings would be visualized and positioned alongside thousands of others.

The design avoided traditional web conventions. No scrolling, no text-heavy layouts. Just one large, panning interface, built around AI-generated portraits, that invited exploration. Every interaction was intentional, from the camera movement to the micro-animations.

We selected Microsoft’s Fluent UI system as the base design framework. It offered built-in alignment with Microsoft’s brand and web standards, which helped us move quickly and confidently. With a fast-moving timeline and evolving AI landscape, Fluent UI let us spend less time on scaffolding and more on what made Mosaic distinct.

From there, we extended Fluent UI’s dark mode with custom layered glass effects and motion-driven transitions that deepened the sense of presence. The result moved beyond UI and became an environment.

AI at Work: Sentiment Analysis Meets Generative Art

On the backend, we deployed two models hosted in Azure OpenAI Service. First, ChatGPT analyzed each participant’s response and interpreted the sentiment (emotions like hopeful, anxious, or curious) along with how strongly the participant seemed to feel it. That structured sentiment was then paired with style instructions and passed to DALL·E to generate a visual portrait that matched the emotional tone.

Making that process feel seamless required more than just calling APIs. We designed a system that could translate a wide range of inputs into meaningful emotional categories, then guide the models to generate visuals that felt aligned. For example, we wanted responses like “a little nervous” and “totally terrified” to result in portraits that looked appropriately different.
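
To make the idea concrete, here is a minimal TypeScript sketch of how a structured sentiment could be turned into an image prompt whose wording changes with intensity. It is an illustration, not the production code: the `Sentiment` shape, the 0 to 1 intensity scale, the palette strings, and the function names are all assumptions.

```typescript
// Hypothetical shape of the structured sentiment produced by the analysis step.
type Emotion = "hopeful" | "anxious" | "curious";

interface Sentiment {
  emotion: Emotion;
  intensity: number; // assumed scale: 0 (barely felt) to 1 (overwhelming)
}

// Illustrative style vocabulary, invented for this sketch.
const PALETTES: Record<Emotion, string> = {
  hopeful: "warm golds and soft sunrise tones",
  anxious: "cold blues and muted greys",
  curious: "vivid teals and violets",
};

// Translate a numeric intensity into wording an image model can act on.
function intensityPhrase(intensity: number): string {
  // Treat invalid values as a mid-range feeling, and clamp the rest to [0, 1].
  const value = Number.isFinite(intensity)
    ? Math.min(1, Math.max(0, intensity))
    : 0.5;
  if (value < 0.34) return "subtle, restrained";
  if (value < 0.67) return "clearly visible";
  return "dramatic, overwhelming";
}

// Combine emotion and intensity into a single prompt string.
function buildPortraitPrompt(sentiment: Sentiment): string {
  return [
    "Stylized portrait",
    `palette of ${PALETTES[sentiment.emotion]}`,
    `${intensityPhrase(sentiment.intensity)} expression of feeling ${sentiment.emotion}`,
  ].join(", ");
}

// "A little nervous" and "totally terrified" share an emotion but not a prompt.
const mildPrompt = buildPortraitPrompt({ emotion: "anxious", intensity: 0.2 });
const strongPrompt = buildPortraitPrompt({ emotion: "anxious", intensity: 0.95 });
```

Keeping the mapping in plain data makes it easy to adjust the vocabulary without touching the code that calls the image model.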

We also tackled edge cases: responses like “not sure” or “it depends” that didn’t clearly map to an emotion. We created fallback logic to ensure those still led to expressive, appropriate outputs, rather than something random or generic.
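
One way such fallback logic might look is sketched below in TypeScript. The list of known emotions, the `confidence` field, the 0.5 threshold, and the `ambivalent` category are assumptions made for illustration; the actual categories and rules are not described here.

```typescript
// Hypothetical raw output from the sentiment analysis step.
interface RawAnalysis {
  emotion: string;    // free-form label returned by the model
  confidence: number; // assumed 0..1 score
}

// Illustrative set of emotions the portrait styles know how to render.
const KNOWN_EMOTIONS = ["hopeful", "anxious", "curious"] as const;
type KnownEmotion = (typeof KNOWN_EMOTIONS)[number];

// "ambivalent" is an assumed dedicated category for unclear answers,
// so that "not sure" still gets a deliberate style rather than a random one.
type ResolvedEmotion = KnownEmotion | "ambivalent";

function resolveEmotion(raw: RawAnalysis, minConfidence = 0.5): ResolvedEmotion {
  const label = raw.emotion.trim().toLowerCase();
  const known = KNOWN_EMOTIONS.find((emotion) => emotion === label);
  // Accept the label only if it is recognized and the model was confident.
  if (known !== undefined && raw.confidence >= minConfidence) {
    return known;
  }
  return "ambivalent";
}
```

Routing unclear answers to a named category, instead of guessing, keeps the output intentional: the portrait can express uncertainty itself.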

And since many people shared similar feelings about AI, we needed to avoid visual repetition. We introduced controlled randomness into the image generation process, so even if two participants were both “hopeful”, their portraits would still look distinct.
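
A common way to get controlled randomness is to seed a small pseudo-random generator per submission and use it to pick style variations. The TypeScript sketch below shows that technique under stated assumptions: the variation lists, the `submissionId` seed, and all function names are hypothetical, and the source does not say which mechanism was actually used.

```typescript
// FNV-1a: hash a string (for example a submission id) to a 32-bit seed.
function hashString(text: string): number {
  let hash = 0x811c9dc5;
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193);
  }
  return hash >>> 0;
}

// mulberry32: a small seeded PRNG returning floats in [0, 1).
function mulberry32(seed: number): () => number {
  let state = seed >>> 0;
  return () => {
    state = (state + 0x6d2b79f5) >>> 0;
    let t = state;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Illustrative variation axes, invented for this sketch.
const COMPOSITIONS = ["close-up", "three-quarter view", "profile"];
const TEXTURES = ["watercolor wash", "cut-paper collage", "soft pastel"];
const LIGHTING = ["backlit", "side-lit", "diffuse glow"];

interface Variation {
  composition: string;
  texture: string;
  lighting: string;
}

// The same submission id always yields the same variation;
// different ids spread across the available combinations.
function pickVariation(submissionId: string): Variation {
  const random = mulberry32(hashString(submissionId));
  const pick = (options: string[]): string =>
    options[Math.floor(random() * options.length)];
  return {
    composition: pick(COMPOSITIONS),
    texture: pick(TEXTURES),
    lighting: pick(LIGHTING),
  };
}
```

Seeding from the submission makes the variation reproducible, which helps when a portrait has to be regenerated or debugged.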

Finally, we built in safeguards to protect against misuse and prompt manipulation, ensuring the experience stayed safe, respectful, and creative.
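
The specific safeguards are not detailed here. As a generic illustration of a first layer, the TypeScript sketch below normalizes and length-limits free-text input before it reaches any model. The 280-character limit and the function name are assumptions, and a real system would add content filtering on top of this.

```typescript
// Assumed upper bound on response length, chosen for this sketch.
const MAX_RESPONSE_LENGTH = 280;

// Normalize a participant's free-text answer before it is sent to a model.
// Returns null when nothing usable remains.
function sanitizeResponse(input: string): string | null {
  const cleaned = input
    .replace(/[\u0000-\u001f\u007f]/g, " ") // replace control characters with spaces
    .replace(/\s+/g, " ")                   // collapse runs of whitespace
    .trim();
  if (cleaned.length === 0) {
    return null;
  }
  // Cap the length so very long inputs cannot dominate the prompt.
  return cleaned.slice(0, MAX_RESPONSE_LENGTH);
}
```

Input hygiene alone does not stop prompt manipulation. It pairs naturally with passing only a validated, structured result (rather than the raw text) to the image model.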

Engineered for Performance and Emotion

Mosaic had to feel immersive. That meant building something fast, fluid, and deeply responsive, even while rendering thousands of AI-generated images in real time.

Fueled developed the experience as a custom React and Next.js application. We prototyped several rendering approaches before selecting Three.js, a WebGL library capable of delivering the high frame rates a cinematic, emotionally resonant experience requires. Paired with React Three Fiber and the Drei helper library, it gave us the performance and control needed to bring Mosaic to life.

From the outset, we treated performance as a design requirement. A low frame rate would break immersion. A lagging canvas would flatten the emotional impact. We fine-tuned camera behavior, zoom logic, and tile animation to keep motion smooth and natural across devices.
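
One standard technique for keeping camera motion consistent across devices is frame-rate-independent damping, sketched below in TypeScript. The zoom limits, the damping constant, and the function names are assumed values for illustration, not the tuning used in Mosaic.

```typescript
// Move `current` toward `target` by a fraction that depends on elapsed time,
// so the motion covers the same distance per second at 30 fps and at 120 fps.
function damp(
  current: number,
  target: number,
  lambda: number,
  dtSeconds: number
): number {
  return current + (target - current) * (1 - Math.exp(-lambda * dtSeconds));
}

function clamp(value: number, min: number, max: number): number {
  return Math.min(max, Math.max(min, value));
}

// One animation step for the zoom level. Limits and lambda are assumptions.
function stepZoom(zoom: number, targetZoom: number, dtSeconds: number): number {
  const MIN_ZOOM = 0.25;
  const MAX_ZOOM = 4;
  const LAMBDA = 8; // higher values snap to the target faster
  return damp(zoom, clamp(targetZoom, MIN_ZOOM, MAX_ZOOM), LAMBDA, dtSeconds);
}
```

Calling `stepZoom` once per frame with the real frame delta gives an ease-out motion that does not speed up on faster displays.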

To support that level of responsiveness, we engineered custom debugging tools that let us visualize canvas behavior in real time. These tools helped us optimize draw calls, bounding box detection, and frustum culling, ensuring only visible tiles were rendered at any moment.
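
The core idea of culling can be shown with a simplified 2D analogue: compute the rectangle the camera can see and keep only the tiles whose bounding boxes overlap it. This TypeScript sketch assumes a flat canvas and an orthographic-style camera; the `Rect` and `Camera2D` types are hypothetical, and a Three.js scene would perform the equivalent test against a 3D view frustum.

```typescript
// Axis-aligned bounding box in world units.
interface Rect {
  x: number; // left edge
  y: number; // top edge
  width: number;
  height: number;
}

// Hypothetical 2D camera: a center point, a zoom factor, and a viewport size.
interface Camera2D {
  centerX: number;
  centerY: number;
  zoom: number; // > 1 zooms in, < 1 zooms out
  viewportWidth: number;
  viewportHeight: number;
}

// World-space rectangle currently visible through the camera.
function visibleBounds(camera: Camera2D): Rect {
  const width = camera.viewportWidth / camera.zoom;
  const height = camera.viewportHeight / camera.zoom;
  return {
    x: camera.centerX - width / 2,
    y: camera.centerY - height / 2,
    width,
    height,
  };
}

// Standard overlap test for two axis-aligned rectangles.
function intersects(a: Rect, b: Rect): boolean {
  return (
    a.x < b.x + b.width &&
    a.x + a.width > b.x &&
    a.y < b.y + b.height &&
    a.y + a.height > b.y
  );
}

// Keep only the tiles that overlap the visible area.
function cullTiles<T extends Rect>(tiles: T[], camera: Camera2D): T[] {
  const view = visibleBounds(camera);
  return tiles.filter((tile) => intersects(tile, view));
}
```

Because zooming out enlarges the visible rectangle, the same function naturally returns more tiles at low zoom and fewer at high zoom.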

We also built custom lazy loading logic based on camera position. As users explored the canvas, portraits loaded in seamlessly. Images were stored and cached using Azure Blob Storage to ensure reliable delivery and scalability as the dataset grew.
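
Camera-driven lazy loading can be sketched as a function that, given the camera position, returns the tiles that still need their image fetched, nearest first. This TypeScript example is an assumption-laden illustration: the `Tile` type, the circular load radius, and the `loadedIds` set are hypothetical, and the actual fetch from storage is left out.

```typescript
// Hypothetical tile record: an id plus its center position on the canvas.
interface Tile {
  id: string;
  x: number;
  y: number;
}

// Return tiles within `radius` of the camera that have not been loaded yet,
// sorted so the ones closest to the camera are requested first.
function tilesToLoad(
  tiles: Tile[],
  cameraX: number,
  cameraY: number,
  radius: number,
  loadedIds: Set<string>
): Tile[] {
  const distance = (tile: Tile): number =>
    Math.hypot(tile.x - cameraX, tile.y - cameraY);
  return tiles
    .filter((tile) => !loadedIds.has(tile.id) && distance(tile) <= radius)
    .sort((a, b) => distance(a) - distance(b));
}
```

A caller would run this whenever the camera moves, start fetching the returned tiles, and add their ids to `loadedIds` so they are not requested twice. Using a radius somewhat larger than the visible area lets images arrive before they scroll into view.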

This wasn’t just a performant front-end. It was performance in service of emotion, carefully tuned to make the experience feel smooth, interactive, and alive.

More Than a Mosaic

Mosaic ultimately produced more than 2,000 AI-generated portraits. But the numbers weren’t the point. What mattered was the feeling.

The project was showcased internally across Microsoft, earning attention not just for its technical execution, but for its human-centric perspective. In a company defined by engineering, Mosaic introduced a different lens, showing how AI could be used to explore emotion, not just optimize productivity.

Technically, Mosaic stood up to enterprise expectations. The site was designed to perform at scale, delivering a smooth experience across thousands of images and user interactions. Our engineering team worked within Microsoft’s infrastructure, built on Azure, and quickly met internal compliance requirements.

This was never about launching a product. It was about creating a moment. A spark. A prototype that invited researchers, designers, and technologists to think differently about what AI can evoke—and how we might connect through it.

It was, at its core, a shared experiment in digital expression, and one that the Fueled team was proud to help bring to life.